HIMSS Day 2

Day 2 was a bit more draining than day 1, but that was mostly because I made my way into the exhibition hall for the first time. That many people and that much cacophony always leave me a bit ... drained.

On the flip side, I went to several good presentations (a couple on Blockchain).

Today’s sessions were:

- Empowering Data Driven Health

- Blockchain 4 Healthcare: Fit for Purpose

- The Use of Blockchain to Improve Quality Outcomes

One of the more interesting things I heard today was that in Health Care, tech spending has gone up (over the last 20 years) but so has overall health spending. Usually when we see tech spending go up, other spending levels off (or goes down!).

Something else to consider (that I never had) was that “we need to think about doing what’s most cost effective for a person in their lifetime, not just episodically!”

The Blockchain sessions I went to were enlightening, but I’m still not sure I understand what it is and how it works (perhaps I’m just trying to make it more complicated than it is).

That being said, the consensus was that Blockchain is not a panacea for all that ails us. It is a tool that should be used in conjunction with current systems, not a replacement for those systems.

Something else of note: there isn’t a single implementation of Blockchain; there are almost 20 variations of it (although the IEEE is working on standardizing it). This leads me to believe that it is simply too new and too ‘wild’ to be implemented just yet.

That being said, I think that if/when Microsoft bundles or includes BlockChain (in some way) into SQL Server, then it might be the time to look at implementing it in my organization.

In my last session (another one on Blockchain) the idea of using Blockchain to affect quality measures was discussed. The speaker’s main point was that Blockchain may allow us to give patients agency over their health data.

Another interesting point was that Blockchain may be able to allow us to dynamically observe quality measurement instead of just at point of care. This could lead to higher quality and lower costs.

Overall, the BlockChain talks were good, and kind of helped point me in the right direction on what questions to start asking about it.

Well, day 2 is in the books. One more day of educational sessions and exhibits!

HIMSS Day 1 Impressions

I was able to make it to 5 educational sessions today. And the good thing is that I learned something at each one. I think the highlight of the day for me was actually my first session titled, Stacking Predictive Models to Reduce Readmissions.

One key idea from that presentation was focusing on a patient that readmits, not just from a clinical perspective, but from a human perspective. There was a lot of technology that they used to help the care coordinators identify who was going to readmit, but the why of the readmission was always determined via human interaction. I think that may be the single most important thing to remember.

Something else that was mentioned was that the group got their tool out quickly instead of trying to be perfect. It went through a couple of iterations in order to get a tool that was usable by all their various clinics.

Some other key takeaways from today:

- We need to focus on Augmented Human Intelligence instead of Artificial Intelligence (from How Machine Learning and AI Are Disrupting the Current Healthcare System)

- Don’t treat Cloud Service Providers as Plug and Play vendors (from HIPAA and a Cloud Computing Shared Security Model)

- Creation of a committee of ‘No’ to help flesh out ideas before they are implemented (from Intrapreneurship and the Approach to Innovation From Within)

- Think about how to operationalize insights from data, and not just explore the data (from Beyond BI: Building Rapid-Response Advanced Analytics Unit)

That’s a wrap on day 1 at HIMSS. Day 2 looks to be just as exciting (meet with some vendors, attend some more educational sessions, go to a sponsored luncheon).

An Introvert’s guide to large conferences ... or how I survived HIMSS 2018 (and 2017 and 2016)

The thing about HIMSS is that there are a lot of people. I mean ... a lot of people. More than 43k people will attend as speakers, exhibitors or attendees.

Let that sink in for a second.

No. Really. Let. That. Sink. In.

That’s more than the average game attendance of 29 MLB teams. It’s ridiculous.

As an introvert you know what will drain you and what will invigorate you. For me I need to be cautious of conferencing too hard. That is, I need to be aware of myself, my surroundings and my energy levels.

My tips are:

- Have a great playlist on your smart phone. I use an iPhone and get a subscription to Apple Music just for the conference. This allows me to have a killer set of music that helps to drown out the cacophony of people.

- Know when you’ve reached your limit. Even with some sweet tunes it’s easy to get drained. When you’re done you’re done. Don’t be a hero.

- Try to make at least one meaningful connection. I know, it’s hard. But it’s totally worth it. Other introverts are easy to spot because they’re the people on their smart phones pretending to write a blog post while listening to their sweet playlist. But if you can start a conversation, not small talk, it will be worth it. Attend a networking function that’s applicable to you and you’ll be able to find at least one or two people to connect with.

The other tips for surviving HIMSS are the same for any other conference:

- Don’t worry about how you’re dressed ... you will always be underdressed when compared to Hospital Administrators ... you’re in ‘IT’ and you dress like it

- Wear good walking shoes (see the previous tip about being underdressed)

- Drink plenty of water

- Wash your hands and/or have hand sanitizer

- Accept free food when it’s offered

Ok. One day down. 3+ more to go!

Fixing the Python 3 Problem on my Raspberry Pi

In my last post, while speaking about my issues with pip3 and python3, I indicated that I might end up “reinstalling everything on the Pi and starting from scratch.”

Turns out that the fix was easier than I thought. I checked where pip3 and python3 were being executed from by running the which command.

The which pip3 returned /usr/local/bin/pip3 while which python3 returned /usr/local/bin/python3. This is exactly what was causing my problem.

To verify what version of python was running, I checked python3 --version and it returned 3.6.0.
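The same lookup can be scripted from Python. This is only a minimal sketch, not something from my original troubleshooting: the `locate` helper is a hypothetical name, and it relies on the standard library's `shutil.which`, which searches the PATH the way the which command does.

```python
import shutil
import sys


def locate(command):
    """Return the full path that would run for `command`, or None if not found."""
    return shutil.which(command)


if __name__ == "__main__":
    # Report where pip3 and python3 resolve from on this machine.
    for name in ("pip3", "python3"):
        print(name, "->", locate(name))
    # Version of the interpreter that is running this script.
    print("running Python", sys.version.split()[0])
```

If the paths printed point somewhere unexpected (such as /usr/local/bin), that is a hint that a second install is shadowing the system one.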

To fix it I just ran these commands to unlink the new, broken versions:

sudo unlink /usr/local/bin/pip3

And

sudo unlink /usr/local/bin/python3

I found this answer on StackOverflow and tweaked it slightly for my needs.

Now, when I run python3 --version I get 3.4.2 instead of 3.6.0.

Unfortunately I didn’t think to run the --version flag on pip before and after the change, and I’m hesitant to do it now as it’s back to working.

ITFDB!!!

My wife and I love baseball season. Specifically we love the Dodgers and we can’t wait for Spring Training to begin. In fact, today pitchers and catchers report!

I’ve wanted to do something with the Raspberry Pi Sense Hat that I got (since I got it) but I’ve struggled to find anything useful. And then I remembered baseball season and I thought, ‘Hey, what if I wrote something to have the Sense Hat say “#ITFDB” starting 10 minutes before a Dodgers game started?’

And so I did!

The script itself is relatively straightforward. It reads a csv file and checks to see if the current time in California is within 10 minutes of the start time of the game. If it is, then it will send a show_message command to the Sense Hat.

I also wrote a cron job to run the script every minute so that I get a beautiful scrolling bit of text every minute before the Dodgers start!
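As a rough illustration of that check (not the actual code in the repository), here is a minimal sketch using only the standard library. The function name `games_starting_soon`, the column names, and the sample rows are all made up for the example, and it assumes the schedule times and `now` are already in the same time zone.

```python
import csv
import io
from datetime import datetime, timedelta


def games_starting_soon(csv_text, now, window_minutes=10):
    """Return the rows whose start time falls within `window_minutes` after `now`."""
    window = timedelta(minutes=window_minutes)
    upcoming = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        start = datetime.strptime(row["start"], "%Y-%m-%d %H:%M")
        # Comparing full datetimes means year, month, and day all have to match.
        if timedelta(0) <= start - now <= window:
            upcoming.append(row)
    return upcoming


# Made-up sample data; the real schedule.csv may use different columns.
SAMPLE = (
    "opponent,start\n"
    "Opponent A,2018-03-29 16:08\n"
    "Opponent B,2018-09-12 21:35\n"
)

current_time = datetime(2018, 3, 29, 16, 0)
print([row["opponent"] for row in games_starting_soon(SAMPLE, current_time)])
```

Passing `now` in as an argument, rather than reading the clock inside the function, makes the check easy to try with any date.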

The code can be found on my GitHub page in the itfdb repository. There are 3 files:

- Program.py, which does the actual running of the script

- data_types.py, which defines a class used in Program.py

- schedule.csv, which is the schedule of the games for 2018 as a csv file

I ran into a couple of issues along the way. First, my development environment on my MacBook Pro was Python 3.6.4 while the Production Environment on the Raspberry Pi was 3.4. This made it so that the code about time ran locally but not on the server 🤦♂️.

It took some playing with the code, but I was finally able to go from this (which worked on 3.6 but not on 3.4):

now = utc_now.astimezone(pytz.timezone("America/Los_Angeles"))

game_date_time = game_date_time.astimezone(pytz.timezone("America/Los_Angeles"))

To this which worked on both:

local_tz = pytz.timezone('America/Los_Angeles')

now = utc_now.astimezone(local_tz)

game_date_time = local_tz.localize(game_date_time)

For both, the game_date_time variable setting was done in a for loop.

Another issue I ran into was being able to display the message for the Sense Hat on my MacBook Pro. I wasn’t ever able to because of a package that is missing (RTIMU) and is apparently only available on Raspbian (the OS on the Pi).

Finally, in my attempts to get the code I wrote locally to work on the Pi, I decided to install Python 3.6.0 on the Pi (while 3.4 was installed), which seemed to do nothing but break pip. It looks like I’ll be learning how to uninstall Python 3.4 OR reinstalling everything on the Pi and starting from scratch. Oh well … at least it’s just a Pi and not a real server.

Although, I’m pretty sure I hosed my Linode server a while back and basically did the same thing so maybe it’s just what I do with servers when I’m learning.

One final thing. While sitting in the living room watching DC Legends of Tomorrow the Sense Hat started to display the message. Turns out, I was accounting for the minute, hour, and day but NOT the month. The Dodgers play the Cubs on September 12 at 9:35 (according to the schedule.csv file anyway) and so the conditions to display were met.

I added another condition to make sure it was the right month and now we’re good to go!
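To make the bug concrete, here is a simplified sketch of the two kinds of check. The function names and the exact times are hypothetical (I'm assuming an evening start for the example), and the real script also handles the 10 minute window, which is left out here.

```python
from datetime import datetime


def matches_fields_only(now, start):
    """Buggy check: compares day, hour, and minute but ignores the month."""
    return (now.day, now.hour, now.minute) == (start.day, start.hour, start.minute)


def matches_with_month(now, start):
    """Fixed check: the month has to match as well."""
    return now.month == start.month and matches_fields_only(now, start)


# Hypothetical times: same day-of-month and clock time, different months.
game_start = datetime(2018, 9, 12, 21, 35)
living_room = datetime(2018, 2, 12, 21, 35)

print(matches_fields_only(living_room, game_start))  # True, a false alarm
print(matches_with_month(living_room, game_start))   # False
```

Comparing whole datetime values instead of individual fields would avoid this class of bug altogether.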

Super pumped for this season with the Dodgers!

Using MP4Box to concatenate many .h264 files into one MP4 file: revisited

In my last post I wrote out the steps that I was going to use to turn a ton of .h264 files into one mp4 file with the use of MP4Box.

Before outlining my steps I said, “The method below works but I’m sure that there is a better way to do it.”

Shortly after posting that I decided to find that better way. Turns out, ~~it wasn’t really that much more work~~ it was much harder than originally thought.

The command below is a single line and it will create a text file (com.txt) and make it executable so that it can be run as a shell script:

~~(echo '#!/bin/sh'; for i in *.h264; do if [ "$i" -eq 1 ]; then echo -n " -add $i"; else echo -n " -cat $i"; fi; done; echo -n " hummingbird.mp4") > /Desktop/com.txt | chmod +x /Desktop/com.txt | ~/Desktop/com.txt~~

(echo '#!/bin/sh'; echo -n "MP4Box"; array=(*.h264); for index in "${!array[@]}"; do if [ "$index" -eq 0 ]; then echo -n " -add ${array[index]}"; else echo -n " -cat ${array[index]}"; fi; done; echo -n " hummingbird.mp4") > com.txt && chmod +x com.txt

Next you execute the script with

./com.txt

OK, but what is it doing? The parentheses surround a set of echo commands whose output goes to com.txt. I’m using a for loop with an if statement. The reason I can’t do a straight for loop is that the first h264 file used in MP4Box needs to have the -add flag while all of the others need the -cat flag.

Once the output has been written to com.txt, chmod +x changes its mode to make it executable.

Finally, running ./com.txt executes the generated MP4Box command.
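For comparison, the same idea (-add for the first file, -cat for every other file) can be sketched in Python. This is not what I actually ran; `build_mp4box_command` and the clip names are hypothetical, and it only builds the command rather than calling MP4Box.

```python
import shlex


def build_mp4box_command(files, output="hummingbird.mp4"):
    """Build an MP4Box argument list: -add for the first file, -cat for the rest."""
    if not files:
        raise ValueError("need at least one input file")
    args = ["MP4Box"]
    for index, name in enumerate(files):
        flag = "-add" if index == 0 else "-cat"
        args.extend([flag, name])
    args.append(output)
    return args


# Hypothetical file names; in practice they could come from sorted(glob.glob("*.h264")).
command = build_mp4box_command(["clip1.h264", "clip2.h264", "clip3.h264"])
print(shlex.join(command))
```

On a machine with MP4Box installed, the resulting list could be handed to `subprocess.run(command, check=True)` instead of writing out an intermediate script file.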

I was pretty stoked when I figured it out and was able to get it to run.

The next step will be to use it for the hundreds of h264 files that will be output from my hummingbird camera that I just installed today.

I’ll have a post on that in the next couple of days.

Using MP4Box to concatenate many .h264 files into one MP4 file

The general form of the concatenate command for MP4Box is:

MP4Box -add <filename>.ext -cat <filename>.ext output.ext

When you have more than a couple of output files, you’re going to want to automate that -cat part as much as possible because let’s face it, writing out that statement even more than a couple of times will get really old really fast.

The method below works but I’m sure that there is a better way to do it.

- echo out the command you want to run. In this case:

(echo -n "MP4Box "; for i in *.h264; do echo -n " -cat $i"; done; echo -n " hummingbird.mp4") >> com.txt

- Edit the file com.txt created in the first step so that you can change the first -cat to -add:

vim com.txt

- While still in vim editing the com.txt file, add #!/bin/sh to the first line. When finished, exit vim

- Change the mode of the file so it can run:

chmod +x com.txt

- Run the file:

./com.txt

Why am I doing all of this? I have a Raspberry Pi with a Camera attachment and a motion sensor. I’d like to watch the hummingbirds that come to my hummingbird feeder with it for a day or two and get some sweet video. We’ll see how it goes.

The Sports Center Effect

This last weekend was the divisional round of the NFL playoffs. There were 3 really good games and the game that the Patriots played in. This is unfortunate because I only had the Patriots game on the calendar for the weekend so that meant other things could get scheduled whenever and I would end up missing many, if not all, of the other games.

Sunday had 2 amazing games. The Steelers lost to the Jaguars in an upset and I got to see the last drive that put the Steelers down by a Field Goal as time expired. It was simply amazing to see how hard they played even though they must have known that they weren’t going to win.

When I got home from being out, the New Orleans at Minnesota game was at halftime with Minnesota up 17-0. It looked like it was going to be a rout and I was glad that I wasn’t really watching it.

I started to do the chores that needed to be done (laundry, straightening up, getting ready for the week) and had the game on in the background.

And then the improbable started to happen. Drew Brees played an amazing half of football and all of a sudden it’s 21-20 New Orleans. After a couple of field goals are exchanged it’s 24-23 New Orleans with Minnesota in possession of the ball.

Case Keenum had made a couple of errors earlier in the game (one interception led to a touchdown and really helped the Saints get back in the game). It looked like he was on track to do something similarly ill-advised.

Then, with 10 seconds left, the ball is snapped and he passes it to Stefon Diggs, who catches the ball. And just as he catches the ball, Marcus Williams, a defensive back for New Orleans, is lined up to make an ordinary tackle in an extraordinary situation.

I was only on my high school football team for 2 years, but one thing the coaches were always on us about was wrapping up the ball carrier when we were going to tackle. “Wrap him up” they’d scream at us. Over and over again.

It became something we did just so they’d stop yelling at us (for that anyway).

So Marcus Williams is getting ready to tackle Stefon Diggs and all he has to do is “Wrap him up!” But something inside of Williams’ head is saying, “Sports Center highlight” and instead of going for the boring but effective arm-wrapping tackle, he tried to hit Diggs with his shoulder, hoping to knock the ball loose.

Instead, he whiffs by Diggs who spins, plants his hand on the ground to stay up and proceeds to run 60 yards for the game winning, walk-off, touchdown.

I truly believe that Williams was thinking about how cool it would be to get on Sports Center when he was deciding how to tackle Diggs, and that cost the Saints the game.

Dear Sports Center, stop making our sports be bad and our athletes make dumb decisions. Can you just go away now? Ok, thanks, bye.

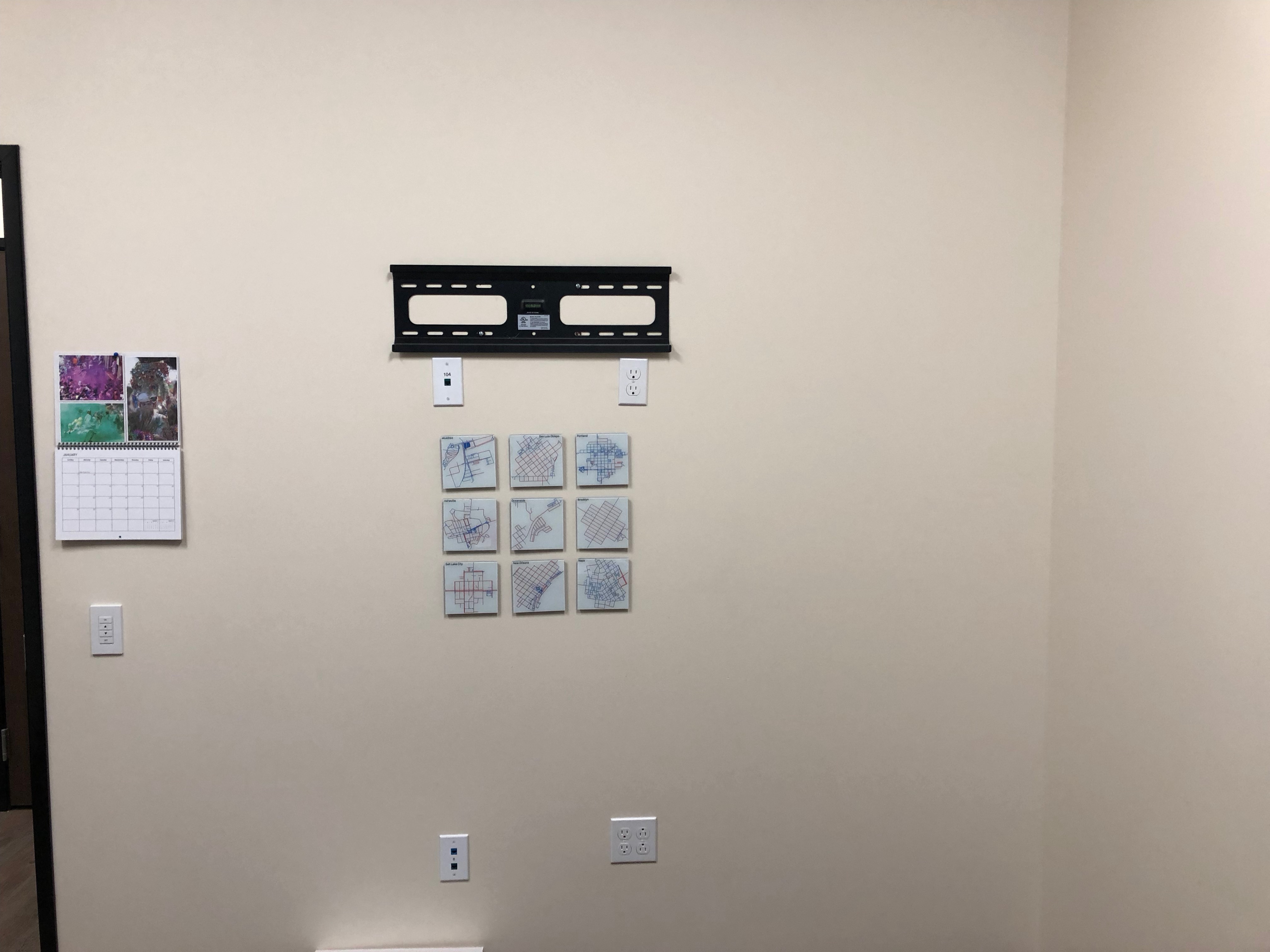

My Map Art Project

I’d discovered a python package called osmnx which will take GIS data and allow you to draw maps using python. Pretty cool, but I wasn’t sure what I was going to do with it.

After a bit of playing around with it I finally decided that I could make some pretty cool Fractures.

I’ve got lots of Fracture images in my house and I even turned my diplomas into Fractures to hang up on the wall at my office, but I hadn’t tried to make anything like this before.

I needed to figure out what locations I was going to do. I decided that I wanted to do 9 of them so that I could create a 3 x 3 grid of these maps.

I selected 9 cities that were important to me and my family for various reasons.

Next, writing the code. The script is 54 lines of code and doesn’t really adhere to PEP8 but that just gives me a chance to do some reformatting / refactoring later on.

In order to get the desired output I needed several libraries:

osmnx (as I’d mentioned before)

matplotlib.pyplot

numpy

PIL

If you’ve never used PIL before, it’s the ‘Python Imaging Library’ and according to its home page it

adds image processing capabilities to your Python interpreter. This library supports many file formats, and provides powerful image processing and graphics capabilities.

OK, let’s import some libraries!

import osmnx as ox, geopandas as gpd, os

import matplotlib.pyplot as plt

import numpy as np

from PIL import Image

from PIL import ImageFont

from PIL import ImageDraw

Next, we establish the configurations:

ox.config(log_file=True, log_console=False, use_cache=True)

The ox.config allows you to specify several options. In this case, I’m:

- Specifying that the logs be saved to a file in the log directory

- Suppressing the output of the log file to the console (this is helpful to have set to True when you’re first running the script to see what, if any, errors you have)

- Setting use_cache=True, which will use a local cache to save/retrieve HTTP responses instead of calling the API repetitively for the same request URL

This option will help performance if you have to run the script more than once.

OSMnx has many different options to generate maps. I played around with the options and found that the walking network within 750 meters of my address gave me the most interesting lines.

AddressDistance = 750

AddressDistanceType = 'network'

AddressNetworkType = 'walk'

Now comes some of the most important decisions (and code!). Since I’ll be making this into a printed image I want to make sure that the image and resulting file will be of a high enough quality to render good results. I also want to start with a white background (although a black background might have been kind of cool). I also want to have a high DPI. Taking these needs into consideration I set my plot variables:

PlotBackgroundColor = '#ffffff'

PlotNodeSize = 0

PlotFigureHeight = 40

PlotFigureWidth = 40

PlotFileType = 'png'

PlotDPI = 300

PlotEdgeLineWidth = 10.0

I played with the PlotEdgeLineWidth a bit until I got a result that I liked. It controls how thick the route lines are and is influenced by the PlotDPI. For the look I was going for 10.0 worked out well for me. I’m not sure if that means a 30:1 ratio for PlotDPI to PlotEdgeLineWidth would be universal but if you like what you see then it’s a good place to start.
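To put numbers on those settings, here is a small worked calculation. It assumes the figure height and width are in inches (as matplotlib figure sizes are) and that nothing gets cropped when the file is saved, so treat the pixel counts as approximate; `pixel_size` is just a hypothetical helper for the arithmetic.

```python
PlotFigureHeight = 40      # inches
PlotFigureWidth = 40       # inches
PlotDPI = 300              # dots per inch
PlotEdgeLineWidth = 10.0


def pixel_size(inches, dpi):
    """Convert a length in inches to pixels at the given DPI."""
    return int(inches * dpi)


width_px = pixel_size(PlotFigureWidth, PlotDPI)
height_px = pixel_size(PlotFigureHeight, PlotDPI)
ratio = PlotDPI / PlotEdgeLineWidth

print(width_px, "x", height_px, "pixels")      # 12000 x 12000 pixels
print("DPI to line width ratio:", ratio)       # 30.0
```

An image that large is why the output files are big, and also why a line width that looks heavy on screen still prints well.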

One final piece was deciding on the landmarks that I was going to use. I picked nine places that my family and I had been to together and used addresses that were either of the places that we stayed at (usually hotels) OR major landmarks in the areas that we stayed. Nothing special here, just a text file with one location per line set up as

Address, City, State

For example:

1234 Main Street, Anytown, CA

So we just read that file into memory:

landmarks = open('/Users/Ryan/Dropbox/Ryan/Python/landmarks.txt', 'r')

Next we set up some scaffolding so we can loop through the data effectively

landmarks = landmarks.readlines()

landmarks = [item.rstrip() for item in landmarks]

fill = (0,0,0,0)

city = []

The loop below is doing a couple of things:

- Splits each landmarks entry into its base elements by breaking it apart at the commas. (I can do this because of the way that the addresses were entered. Changes may be needed to account for more complex addresses, i.e. those with multiple address lines (suite numbers, etc.), or if local addresses aren’t constructed in the same way that US addresses are.)

- Appends the second and third elements of the parts array to the city list, joined with an underscore, to convert Anytown, CA to Anytown_CA

for element in landmarks:

    parts = element.split(',')

    city.append(parts[1].replace(' ', '', 1)+'_'+parts[2].replace(' ', ''))
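Here is the same string handling pulled out into a small standalone function so it can be tried on its own. The name `city_label` and the second sample address are made up for the example.

```python
def city_label(address_line):
    """Turn 'Address, City, State' into 'City_State' the way the loop does."""
    parts = address_line.split(',')
    city = parts[1].replace(' ', '', 1)   # removes only the first (leading) space
    state = parts[2].replace(' ', '')     # removes every space
    return city + '_' + state


print(city_label('1234 Main Street, Anytown, CA'))        # Anytown_CA
print(city_label('1 Example Road, San Luis Obispo, CA'))  # San Luis Obispo_CA
```

Note that a multi-word city keeps its internal spaces, because only the first space in the city element is removed.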

This next line isn’t strictly necessary as it could just live in the loop, but it was helpful for me when writing to see what was going on. We want to know how many items are in the landmarks list.

rows = len(landmarks)

Now to loop through it. A couple of things of note:

The package includes several graph_from_... functions. They take as input some type, like address, i.e. graph_from_address (which is what I’m using) and have several keyword arguments.

In the code below I’m using the ith landmarks item, setting the distance, distance_type, and network_type, and specifying an option to make the map simple by setting simplify=True.

To add some visual interest to the map I’m using this line

ec = ['#cc0000' if data['length'] >=100 else '#3366cc' for u, v, key, data in G.edges(keys=True, data=True)]

If the length of the part of the map is longer than 100m then the color is displayed as #cc0000 (red) otherwise it will be #3366cc (blue)
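The coloring rule can be tried without downloading any map data. In this sketch the edge tuples are made-up stand-ins shaped like what G.edges(keys=True, data=True) yields (start node, end node, key, attribute dictionary), and `edge_colors` is a hypothetical wrapper around the same list comprehension.

```python
def edge_colors(edges, threshold=100, long_color='#cc0000', short_color='#3366cc'):
    """Pick a color per edge: red at or above the threshold length, blue below it."""
    return [long_color if data['length'] >= threshold else short_color
            for u, v, key, data in edges]


# Made-up edges: (start node, end node, key, attributes with a length in meters).
sample_edges = [
    (1, 2, 0, {'length': 42.5}),
    (2, 3, 0, {'length': 100.0}),
    (3, 4, 0, {'length': 250.0}),
]

print(edge_colors(sample_edges))
```

Because the comparison is >=, an edge of exactly 100m is drawn in red.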

The plot_graph is what does the heavy lifting to generate the image. It takes as input the output from the graph_from_address and ec to identify what and how the map will look.

Next we use the PIL library to add text to the image. It takes into memory the image file and saves out to a directory called /images/. My favorite part of this library is that I can choose what font I want to use (whether it’s part of the system fonts or a custom user font) and the size of the font. For my project I used San Francisco at 512.

Finally, there is an exception for the code that adds text. The reason for this is that when I was playing with adding text to the image I found that for 8 of 9 maps having the text in the upper left hand corner worked really well. It was just that last one (San Luis Obispo, CA) that didn’t.

So, instead of trying to find a different landmark, I decided to take a bit of artistic license and put the San Luis Obispo text in the upper right hand corner.
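One way to express that kind of exception is a small helper that works out where the text should start for a given corner. This is a hypothetical sketch, not the code from my script: `text_origin`, the margin value, and the widths are all assumptions for illustration.

```python
def text_origin(image_width, text_width, corner='upper_left', margin=100):
    """Return the (x, y) pixel position where the text should start."""
    if corner == 'upper_left':
        x = margin
    elif corner == 'upper_right':
        x = image_width - text_width - margin
    else:
        raise ValueError('unknown corner: ' + corner)
    # Never start left of the image edge, so the text is not cut off there.
    return (max(x, 0), margin)


# Assumed sizes: a 12000 pixel wide image and text that renders 3000 pixels wide.
print(text_origin(12000, 3000))                   # (100, 100)
print(text_origin(12000, 3000, 'upper_right'))    # (8900, 100)
```

Keeping a margin on every side also leaves some room in case the edges get trimmed when the image is printed.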

Once the script is all set simply typing python MapProject.py in my terminal window from the directory where the file is saved generated the files.

All I had to do was wait and the images were saved to my /images/ directory.

Next, upload to Fracture and order the glass images!

I received the images and was super excited. However, upon opening the box and looking at them I noticed something wasn’t quite right

![Napa with the text slightly off the image](images/uploads/2018/01/Image-12-16-17-6-55-AM.jpeg)

As you can see, the place name is cut off on the left. Bummer.

No reason to fret though! Fracture has a 100% satisfaction guarantee. So I emailed support and explained the situation.

Within a couple of days I had my bright and shiny fractures to hang on my wall

![Napa with the text properly displaying](images/uploads/2018/01/IMG_9079.jpg)

So now my office wall is no longer blank and boring, but interesting and fun to look at.

My Outlook Review Process

In a previous post I spoke about my use of Rules and Custom Actions in Outlook. In this post I’ll talk about my Review process which I adapted from David Allen’s Getting Things Done methodology.

There are several decisions that need to be made when reviewing email:

- Is this for me?

- Is it something that I need to do right now?

If the email is not for me then I need to decide who it is for. Once I have made that decision I Delegate the responsibility of the email.

I will forward the email (or write up a new email with the salient details) and send it to the person that I’m delegating the responsibility to. But here’s the trick … I have a rule that if I put my email address in the BCC line it will be moved to a folder called @waiting and flagged as a follow up item.

I then review the @waiting folder once a day or every other day and will send a follow up email if needed OR delete the email once the person has responded to me.

The next decision I need to make is “Do I need to do this right now?” If I don’t, then I’ll Defer a response or action to the needed time. Unfortunately Outlook doesn’t have a good defer mechanism like my beloved OmniFocus, so I will convert the email into a meeting, remove the attendees other than me, and set it for the date and time when I need to work on the email.

Finally, the email may require me to Do something right now. In that case, I focus fully on the requirements of the email and do it.

This may involve scheduling a meeting, or supplying times when I’m available for a meeting, or it might be answering a question asked of me. I will do any necessary research. Sometimes, as I start working on an email that requires a decision, I’ll discover that I don’t have enough information to actually make that decision.

When that happens I’ll send an email (either forwarding or creating a new one) to the person I think can supply the information and, you guessed it, I’ll BCC myself so that the email is put into my @waiting folder.

Using the above methodology I’m able to manage the massive amounts of email that I receive. Hopefully this helps someone out there who is drowning in email.
